This is a proof of concept that fine-tunes Meta's llama-7b on a custom Datacom dataset that I generated with self-instruct techniques. I believe locally hosted models could be an interesting alternative to OpenAI API calls and vector databases for building assistants that help with specific tasks and provide information.

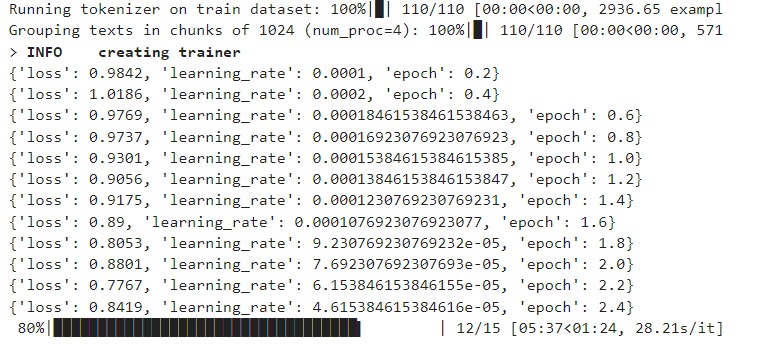

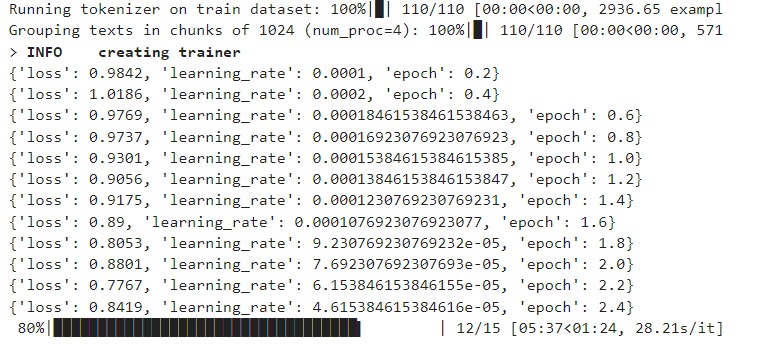

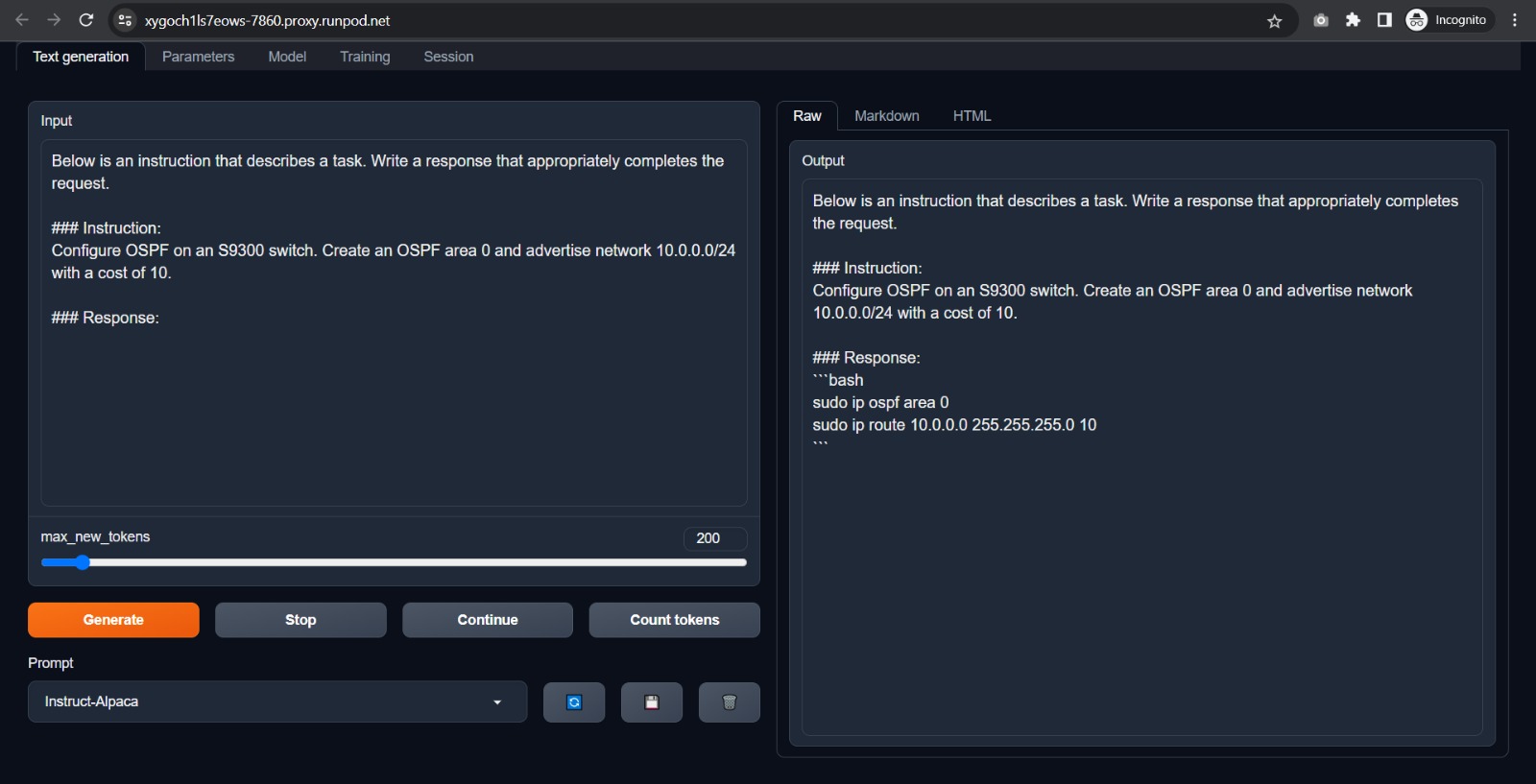

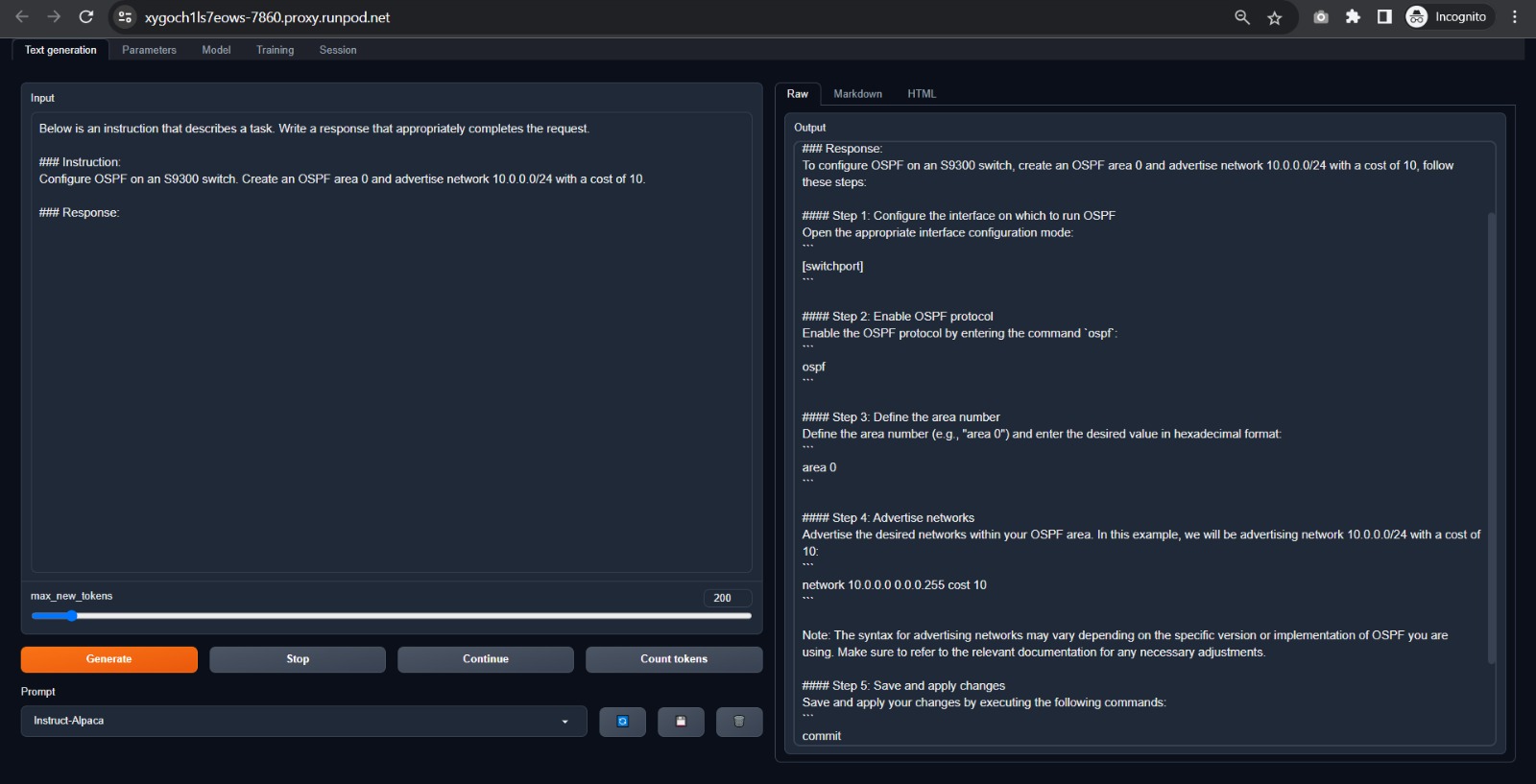

I fully expected complete gibberish from the first attempt, but I was pleasantly surprised by the quality of the results:

| Base Llama2 | llama-2-datacom |
|---|---|
| | |

Clearly, base Llama 7b has no idea what's going on and can't provide information about datacom configurations. However, once augmented with llama-2-datacom, it has no trouble generating high-quality responses that are clearly derived from the new datacom dataset.

Being a language model, llama-2-datacom is prone to hallucinations and can make up details or give incorrect information. That said, this is still very much in the early stages, and the output could be improved and refined in a number of ways. The most obvious one is prompting: it's not really advisable to use the default chat assistant from the webui. There is also plenty of room to improve the dataset, and anyone interested can contribute by submitting pull requests.
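As a rough illustration of prompting outside the default chat assistant, here is a minimal sketch that wraps a question in the instruction templates published in the Stanford Alpaca repository. It assumes llama-2-datacom was trained with those templates unchanged, which this README does not confirm. The `build_prompt` helper and the sample question are hypothetical and only there for illustration.

```python
# Prompt templates as published in the Stanford Alpaca repository.
# Assumption: llama-2-datacom was fine-tuned with these templates unchanged.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_prompt(instruction: str, context: str = "") -> str:
    """Hypothetical helper: format a question the way Alpaca-style training data is laid out."""
    instruction = instruction.strip()
    context = context.strip()
    if context:
        # Use the two-part template when extra context is supplied.
        return PROMPT_WITH_INPUT.format(instruction=instruction, input=context)
    return PROMPT_NO_INPUT.format(instruction=instruction)


# Hypothetical sample question, not taken from the dataset.
example = build_prompt("How do I configure a VLAN on an access port?")
print(example)
```

The resulting string can be pasted into a plain text-completion mode, so the model sees the same layout it saw during training instead of a generic chat wrapper.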

This project is licensed under the MIT License.

I wouldn't have thought of this without Stanford's Alpaca research. Special thanks to them for contributing the script that generates the dataset and the training code.